Industrial temperature measurement and control are two of the most dynamic subsets of the process control industry. Sensors are manufactured in every size, shape, length, and accuracy imaginable to fulfill a specific temperature application. In many cases, probes and probe assemblies are chosen not based on the application’s success, but on other factors such as price, expediency, or availability.

The calibration of an industrial temperature sensor should be well thought out in the early design stage of the process. Doing this early on ensures a better match of the sensor to the application, which means better overall accuracy and reduced intrinsic uncertainty.

To correctly calibrate a temperature sensor in use or before its use, four critical factors must be considered:

- Understanding the dynamics of the entire process

- Choosing the sensor that best fits the application

- Calibrating the system to best emulate the process

- Managing the recalibration of the sensor to assure quality

Industrial process temperature measurements are more critical than ever. Attempts to improve the quality or efficiency of industrial processes have led to a rapid increase in the number of temperature sensors installed in these systems as well as increased requirements for measurement accuracy. There are many sources of measurement error present in these systems. This discussion will focus on the sensors themselves. Temperature sensors are generally designed for a particular measurement application, not for the ease with which they can be calibrated or supported. The resulting variety of shapes, sizes, and types may limit the calibration accuracy and often compounds an already difficult support situation. In some cases, the sensors chosen for an application may not be the best choice for the measurement attempted in that application, creating additional measurement error.

To reduce the measurement error to acceptable levels, all aspects of the process measurement and its traceability must be considered. This includes the suitability of the sensor to perform the measurement of interest (its match to the process), as well as the calibration of the sensor and its stability in the field. Best results are obtained when this is accomplished early in the design of the process or prior to the actual implementation of the measurement scheme. It is often much more difficult and costly to salvage an inadequate measurement system than to install or implement a satisfactory measurement system in the first place.

To that end, there are four critical factors to consider which directly influence the capability of the measurement system.

- Understanding the dynamics of the entire process being measured

- Selecting the sensor that adequately fits the application

- Calibrating the sensor in a way which best emulates the process

- Managing the calibration of the sensor to assure quality

Process evaluation

The first step in designing a temperature monitoring or control application is a thorough examination of the process itself regarding the dynamic thermal properties of the process. Some of the questions which should be answered are listed below.

- What is the origin of the heat?

- What is the mode of transmission?

- Is the process static or dynamic?

- Why is it being monitored?

- What accuracy is required?

- Will the sensor be subjected to a harsh environment?

- Are there contaminants or chemicals present?

- What are the implications of measurement errors?

Let’s assume that you need to monitor a typical autoclave independent of the unit’s internal control. You will want to understand the thermal dynamics inside the unit. What is generating the heat? How does it flow or move inside the sterilizing area? How does the heat make its way to the inserted labware? How does this flow affect the placement of the application sensor? Is there a gas purge or vacuum which may affect the sensor? Are contaminants being released from the treated items which may affect the sensor?

Based on the information that you gather on the internal heat workings of the sterilizer, you can better determine the placement and type of sensor required. One location may be better than another. Several tests may be required to understand all the temperature issues. Only after you have a firm grasp of these details can you begin to look at the best way to monitor its temperature.

Sensor selection and placement

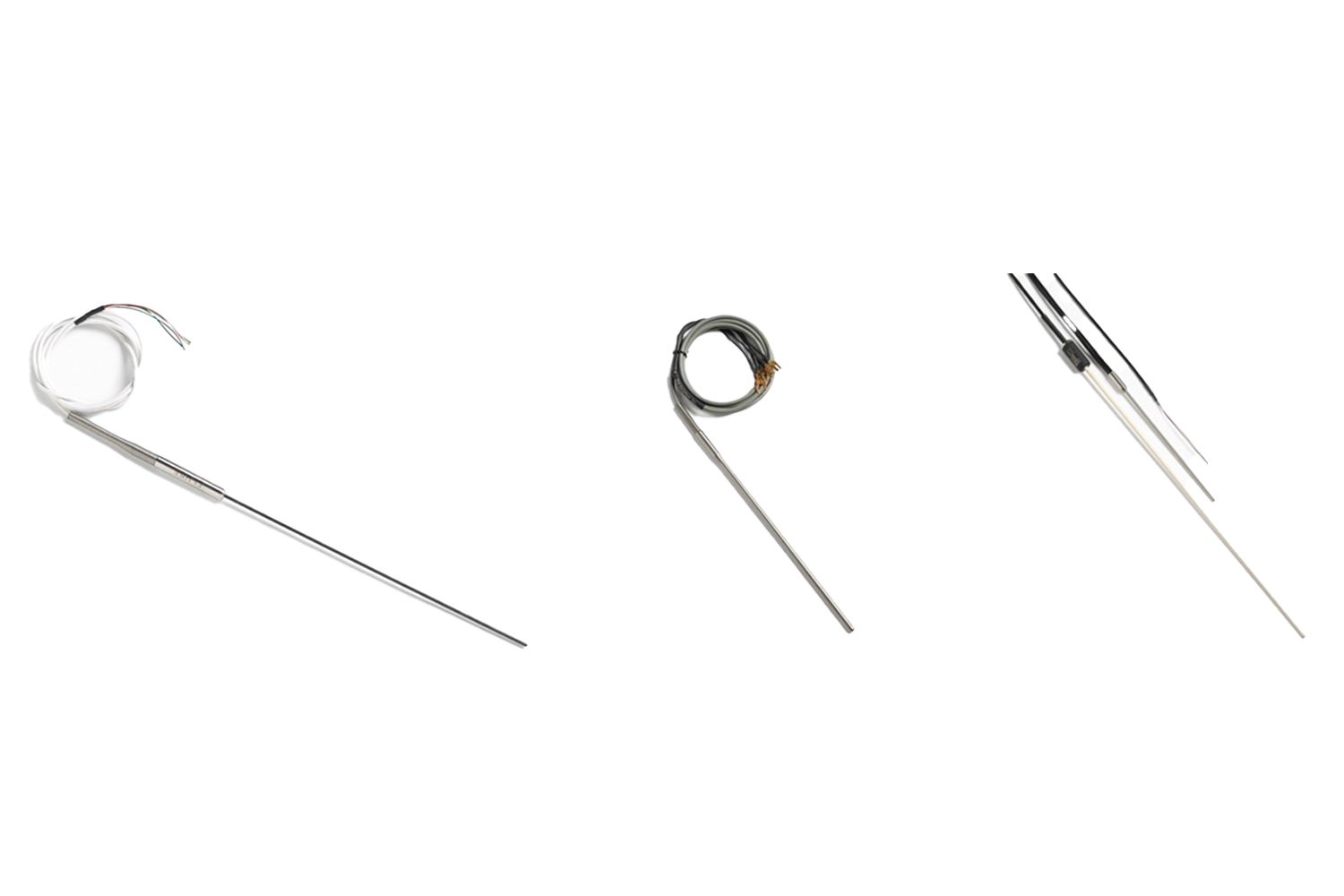

Sensor selection and placement are critical factors. The accuracy limits of the measurement depend more on the sensor selection and placement than any other single aspect. This sounds so fundamental but is often overlooked. The sensor should fit the application. Select an air probe for gas measurements and an immersion probe for liquid measurements. Consider stem effect when specifying the length and diameter. Understand how the sensor is affected by the installation and subsequent heat treating (or cold quenching for cryogenic applications). Make sure that the wire and transition junctions can withstand any extreme temperature that they may be subjected to and that they are hermetically sealed to prevent moisture ingress if necessary.

Select the type of sensor (PRT, thermistor, or thermocouple) based on the temperature range, accuracy requirements, calibration requirements, sensitivity, size, and your electronics. PRTs can be used for high accuracy requirements over a relatively wide temperature range. Thermistors can also provide high accuracy but only over a narrow temperature range. Thermocouples are often used successfully for low accuracy or for high temperature applications, or for applications where harsh environments are encountered.

As mentioned above, keep in mind how exposure to the process being measured will affect the sensor or limit its usefulness. For example, many bake-out ovens and high temperature industrial autoclaves use exposed type K thermocouples for the control sensor. We might assume that since K wire is satisfactory as a control sensor, it will be satisfactory as a monitor sensor. However, type K wire degrades very rapidly in these environments, and, if it is not protected from the product gasses or the autoclave vacuum, it will give incorrect measurements after only one use. Either a different, more suitable sensor, or a method of protection for the type K thermocouple must be used to provide good results.

Consider also how the process will stress the sensor. If the sensor just barely meets the required temperature range, the sensor will likely drift more rapidly. Drift is usually accelerated by duration at extreme temperatures. Keep in mind the length of time the sensor will be exposed to any extreme temperatures. Some types of sensors and assemblies are very susceptible to vibration. If the sensor will be exposed to vibration, make sure you choose a sensor that can handle it. Remember, the closer the application is to the limits of the sensor, the more rapidly the sensor will drift. This isn’t necessarily bad, but it must be considered.

Another, often overlooked, fundamental is the placement of the sensor. The sensing area of the sensor should be placed in the critical temperature area. Sometimes this is as simple as choosing a proper position or location in a chamber or oven. Often, this is satisfied by placing the sensor close to the test items or the items being exposed to the process. Occasionally, however, proper placement may be inconvenient, difficult, or disruptive to the process being measured. In these instances, the importance of an accurate measurement must be balanced with the difficulty of correct placement.

For example, if you want to measure the temperature of a fluid in a pipe, the sensor should be positioned inside the pipe, if possible, not attached to the outside of the pipe. Measuring the surface temperature of a pipe will not give a true indication of the temperature of the fluid. If the measurement is very important, it might be worth shutting down the process to facilitate installation. The type of sensor, placement, and desired accuracy should be considered together. Because of physical limitations, some sensor types may be better suited to certain installations. For example, thermistors are often very attractive candidates when a precise measurement is required in a very tight space. Although a thermocouple might fit, accuracy would be limited. In this example, placement and accuracy requirements point to a specific sensor type.

Another example involves the pipe mentioned above. If the fluid in the pipe is an abrasive slurry, requiring the use of a thermowell, the effect that the thermowell has on the accuracy of the measurement might require that a more accurate sensor be used to compensate for losses. Alternatively, the thermowell might place limits on the accuracy attainable such that a lower cost, lower accuracy solution might just as well be used. Whatever the situation, the sensor type, placement, and accuracy requirements are interrelated and should be considered in a unified manner to arrive at the best solution.

Sensor calibration

Temperature sensors are transducers and require calibration and periodic recalibration (we will discuss the management of recalibration later). Because of the variety of types, shapes, sizes, and other unique characteristics, calibration of process temperature sensors can pose a real challenge. Since the goal is to produce a calibration which is valid in the installation, we must pay close attention to how the sensor is used. There are two basic approaches to this goal which we will discuss in the following section. The first approach pertains to the sensor itself. If we have some flexibility in sensor selection, we can choose a sensor which is easy to calibrate and has characteristics which allow this calibration to be valid in the installed system. Consider an application with a pipe that is 6 inches in diameter. A 4-inch-long probe could be inserted into the pipe to make the temperature measurement and would probably do a satisfactory job. However, a probe of this length can be difficult to calibrate, particularly if high accuracy is required. A better solution might be to use a small diameter probe 6 or 8 inches in length with thin wall construction. The small diameter and thin wall will allow high accuracy with minimal immersion, while the extra length will provide for easier calibration.

The second approach pertains to the calibration of the sensor. The calibration should be performed in a manner which models the thermal characteristics of the process as closely as possible. The ideal situation would be to calibrate the sensor in situ by comparison to reference equipment. The reference sensor is placed in proximity and the comparison is performed. If this is not possible and the sensor has to be removed, then the calibration should be set up considering the application. Immersion probes should be calibrated by immersion. If the probe is immersed only 4 inches, then immerse it 4 inches for calibration if possible. If the probe measures surface temperature, then the calibration should be performed on a surface calibrator. If you need better accuracy than that of a typical surface plate, perhaps a surface fixture can be built into a calibration bath to improve the situation. Another consideration is the readout device used in the process. The readout device used for calibration of the sensor should use an excitation current similar to that of the readout used in the process. Resistance type sensors exhibit self-heating due to the power dissipation of the sensor element. Large errors can be present if a different current is used in calibration than in normal use.
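The self-heating effect can be estimated with a simple steady-state relation: the temperature rise is roughly the power dissipated in the element (current squared times resistance) divided by a dissipation constant. The Python sketch below illustrates how a mismatch in excitation current between calibration and normal use shifts the reading. The function names and every numeric value (a 100 Ω element, a 0.05 W/°C dissipation constant, 1 mA and 5 mA currents) are assumptions chosen for illustration, not figures for any particular sensor. The dissipation constant in particular depends strongly on the probe construction and the surrounding medium.

```python
def self_heating_rise_c(current_a, resistance_ohm, dissipation_w_per_c):
    """Approximate steady-state self-heating rise of a resistance sensor, in deg C.

    Uses rise = I^2 * R / dissipation constant (a simplified model).
    """
    if dissipation_w_per_c <= 0:
        raise ValueError("dissipation constant must be positive")
    power_w = current_a ** 2 * resistance_ohm
    return power_w / dissipation_w_per_c


def current_mismatch_error_c(cal_current_a, process_current_a,
                             resistance_ohm, dissipation_w_per_c):
    """Error introduced when the process readout uses a different current
    than the readout used during calibration (positive = reads high)."""
    rise_in_process = self_heating_rise_c(
        process_current_a, resistance_ohm, dissipation_w_per_c)
    rise_in_calibration = self_heating_rise_c(
        cal_current_a, resistance_ohm, dissipation_w_per_c)
    return rise_in_process - rise_in_calibration


# Hypothetical example: calibrated at 1 mA, used in the process at 5 mA.
error = current_mismatch_error_c(
    cal_current_a=0.001,
    process_current_a=0.005,
    resistance_ohm=100.0,        # assumed element resistance
    dissipation_w_per_c=0.05,    # assumed dissipation constant
)
print(f"Estimated error from current mismatch: {error:.3f} deg C")
```

Because the rise scales with the square of the current, even a modest change in excitation current changes the self-heating noticeably, and the effect grows when the sensor sits in a poorly conducting medium such as still air.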

Often, the best solution is to calibrate the sensor and readout together as a system. This is easily accomplished in the field with the portable baths or drywell calibrators which are available. When this method is used with consideration to the thermal properties of the process as discussed above, excellent results can be obtained. The most important thing to remember about calibration is that measurements obtained with temperature sensors are very dependent upon conditions. If the thermal conditions are different in calibration than those in the process, measurement errors will result. The errors can range from a few hundredths of a degree to several tens of degrees. Accurate measurements can only be assured when the application is understood, and any nuances are taken into consideration when the sensor is calibrated.
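A system calibration by comparison reduces to a simple bookkeeping exercise: at each setpoint, record the reference reading and the reading of the sensor and readout under test, then compare the difference against the tolerance the process requires. The following Python sketch shows one way to organize that check. The class name, field names, setpoints, readings, and tolerance are all hypothetical and serve only to illustrate the comparison.

```python
from dataclasses import dataclass


@dataclass
class ComparisonPoint:
    """One comparison reading taken in a bath or drywell (all values in deg C)."""
    setpoint_c: float
    reference_c: float       # reading of the reference thermometer
    system_c: float          # reading of the sensor + readout under test

    @property
    def error_c(self):
        # Positive error means the system under test reads high.
        return self.system_c - self.reference_c


def evaluate_system(points, tolerance_c):
    """Return the worst-case error and whether every point is in tolerance."""
    worst = max(points, key=lambda p: abs(p.error_c))
    return {
        "worst_setpoint_c": worst.setpoint_c,
        "worst_error_c": worst.error_c,
        "passed": abs(worst.error_c) <= tolerance_c,
    }


# Hypothetical readings at three setpoints spanning the process range.
points = [
    ComparisonPoint(setpoint_c=0.0, reference_c=0.01, system_c=0.03),
    ComparisonPoint(setpoint_c=100.0, reference_c=100.02, system_c=100.10),
    ComparisonPoint(setpoint_c=200.0, reference_c=199.98, system_c=200.03),
]
result = evaluate_system(points, tolerance_c=0.1)
print(result)
```

The check is only as meaningful as the conditions under which the readings were taken, so the setpoints, immersion depth, and readout should match the process as closely as practical.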

Calibration management

Some sensor types are more stable than others, but all temperature sensors drift with time and use and require recalibration. Recalibration intervals should be established based upon the stability of the sensor type, the thermal and physical stresses experienced by the sensor during use, and the implications or cost of measurement errors introduced by sensor drift. This requires a thorough understanding of the sensor type, sensor configuration, and process variables. If you have knowledge of these variables, then you can set an appropriate initial calibration interval. Some of this information is easy to determine, but often we must make an educated guess. For example, thermocouple drift with time at elevated temperatures and in specific environments is well established. It is easy to consult tables to help establish initial calibration intervals. On the other hand, the specific effect that vibration in a process may have on a particular PRT design may not be known. In these cases, it is prudent to establish a short initial calibration interval and lengthen it as history builds. The cost and inconvenience of recalibration must be balanced with the criticality of the measurement. Important measurements warrant shorter calibration intervals, whereas less critical ones may allow longer intervals. By understanding how the application affects the sensor, as well as the importance of the measurement itself, a recalibration interval can be established which will satisfy your requirements.
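The idea of starting with a short interval and lengthening it as history builds can be expressed as a simple adjustment rule applied after each recalibration. The Python sketch below is one possible rule, not an established standard: the function name, the thresholds (half of tolerance, full tolerance), the scaling factors, and the minimum and maximum limits are all assumptions that would need to be set from your own process knowledge and the cost of measurement errors.

```python
def next_interval_days(current_days, as_found_error_c, tolerance_c,
                       min_days=30, max_days=730):
    """Suggest the next recalibration interval from the as-found error.

    Hypothetical rule:
      - as-found error beyond tolerance      -> halve the interval
      - as-found error under half tolerance  -> lengthen the interval by 50%
      - otherwise                            -> keep the interval unchanged
    The result is clamped to [min_days, max_days].
    """
    if tolerance_c <= 0:
        raise ValueError("tolerance must be positive")
    ratio = abs(as_found_error_c) / tolerance_c
    if ratio > 1.0:
        proposed = current_days * 0.5
    elif ratio < 0.5:
        proposed = current_days * 1.5
    else:
        proposed = current_days
    return int(min(max(proposed, min_days), max_days))


# Sensor found well within tolerance: the interval is lengthened.
print(next_interval_days(90, as_found_error_c=0.02, tolerance_c=0.1))  # 135
# Sensor found out of tolerance: the interval is shortened.
print(next_interval_days(90, as_found_error_c=0.15, tolerance_c=0.1))  # 45
```

For a critical measurement, a tighter tolerance and a smaller maximum interval would make the rule more conservative. A less critical measurement could allow the interval to grow further.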

Temperature applications are so dynamic that any number of factors can affect the accuracy of your measurements. Some of the important issues which must be considered include the thermal properties of the process, placement of the sensor in the measurement area, the capabilities of the sensor, the “fit” of the sensor to the application, and calibration and calibration management of the sensor itself. Careful analysis of these aspects will provide a deeper understanding of your applications, aid in reducing the measurement errors to an acceptable level, and help you to make a better contribution to your company’s bottom line.